Build your personal AI copilot

A guide to using AI as a long-term thinking partner (including prompts to get you started)

👋 Welcome to a ✨ free edition ✨ of my weekly newsletter. Each week I tackle reader questions about building product, driving growth, and accelerating your career.


One of my favorite collaborators, Tal Raviv, is back with another incredible piece that will change how many of you operate at work. If you’re looking for the promised AI productivity gains everyone’s talking about, start here.

P.S. You can now also listen to these posts in convenient podcast form: Spotify / Apple / YouTube.

For more from Tal, check out his upcoming free lightning lesson on how to use Cursor as a PM copilot, his free recordings of advanced copilot use cases, and his “Build Your Personal PM Productivity System & AI Copilot” course coming up in September (use LENNYSLIST for $100 off). You can also book Tal for one-day workshops with your team.

Here’s my embarrassing story: 11 months ago I wasn’t using AI at work—at all.

My team and I were building AI products used by tens of thousands of people. But when it came to using AI in my own job, I was a proud Luddite.

I didn’t want to sound like “the average of the internet.” I was worried that if I let AI tools do things for me, I’d lose my edge. When I tried using ChatGPT, I found it disappointing for strategic and innovative work—like consulting a chatty Wikipedia.

Deep down, I felt discouraged that I didn’t know the exact magic spells of prompting that only influencers seemed to know.

Then my engineering team kicked off a new, paperwork-intensive version of Scrum for a large initiative that required me to write dozens of detailed user stories. I couldn’t keep up, and all of a sudden I became my team’s bottleneck.

I did what any experienced product manager would do: I whined and complained. Eventually my eng manager, Oleksii, took pity on me and showed me how to write detailed, near-perfect user stories with ChatGPT (which I covered in my discussion on Lenny’s Podcast!).

Oleksii instructed me to (1) paste in an example template of a user story and (2) use speech-to-text as I blabbed on and on about how the product experience should work. I also ended up dictating the background story of the initiative to ChatGPT, just like I would at a team kickoff.

ChatGPT replied with user stories that were concise, thoughtful, and airtight. The edge cases were extremely relevant, as if it had been a member of the team for months. Not only did the results translate my ramblings to engineering’s structured template, but each user story also included stipulations (mobile responsiveness, accessibility, pricing tiers) that I’d often forget.

The results were nearly perfect, and my fingers never even touched the keyboard. ChatGPT was exhibiting superpowers I didn’t know existed.

I had a hunch that mere user stories were just the beginning of how I could use it at my job. Could AI help me with higher-level tasks? Could it answer the ultimate question of knowledge work: What is the most important thing I should do next?

I realized that AI tools had felt like blunt, generic instruments because I wasn’t providing enough context. LLMs can be highly effective for intelligent knowledge work, but only when we provide them with the same background knowledge that any person would need to do the same work.

Recent advances in LLMs allow us to maintain (and grow) this context over time and across conversations, the same way we might keep a colleague in the loop. And we can even instruct LLMs to use consistent behaviors, values, and guidelines for interacting with us.

When LLMs have this valuable and ongoing context about our goals, our role, our projects, our team, and our wider org, they become our “AI copilot”—a real thinking partner for long-term, complex work. Remember my fear of losing my edge? When we treat LLMs as partners that inspire rather than replace our thinking, our output only gets sharper.

An AI copilot might be the least “agentic” way of using AI, and that’s the point. As AI tools proliferate and become more capable, our AI copilot is there to support us and make us better at our jobs. An AI copilot can work with us, not just for us. And that can make all the difference.

Over nearly a year, I’ve taught workshops to more than 20,000 tech employees and founders on how to leverage AI for complex, innovative tasks at work. I can confirm: regardless of company size, vertical, or fluency in AI, many tech workers today are missing out for the same reason I was. They’re not giving LLMs the context they need to reach their full potential.

Below, I’ll share how to change the way you think about working with AI, and how to practically construct your own AI copilot.

What are AI copilots good for?

Leveraging a copilot isn’t about AI producing perfect answers—it’s about getting the most out of ourselves. Daniel Kahneman won the Nobel Prize in economics for his collaboration with Amos Tversky, and I love his reflection: “I am not a genius. Neither is Tversky. Together, we are exceptional.”

After we work together to build AI copilots, my students start using LLMs to help them make strategic decisions, brainstorm roadmap ideas, develop their soft skills, and even support them emotionally. Their copilots challenge their thinking, analyze data, apply decision frameworks, prioritize, and help rehearse hard conversations.

AI copilots also make it infinitely easier to vibe code an AI prototype, run meaningful data analysis, launch an AI automation, generate AI slide decks, and insert-AI-tool-we-don’t-even-know-about-yet. Because our copilots hold up-to-date context about us and our work, we’re often one chat message away from getting a detailed prompt we can take anywhere else.

And yes, AI copilots can also draft PRDs, user research plans, meeting agendas, and Slack updates. (When you regularly loop LLMs into your thinking, generating documents feels like an afterthought.)

Months after building a copilot, students tell me they “use it every day” and it’s “always open at work.” Their copilots keep them focused, remind them who to talk to, catch nuances they would have missed, and turn them into superpowered contributors who can analyze data and discuss technical concepts. They move so fast that leadership taps them to teach everyone else how they got so productive.

And they do! One student created a career development copilot for women on her team. Another is piloting AI coaching for sales managers doing one-on-ones. A third built an onboarding copilot when joining a new company. Two weeks in, when he presented his product strategy, his CEO remarked, “It feels like you’ve been here for months.”

How do I build an AI copilot?

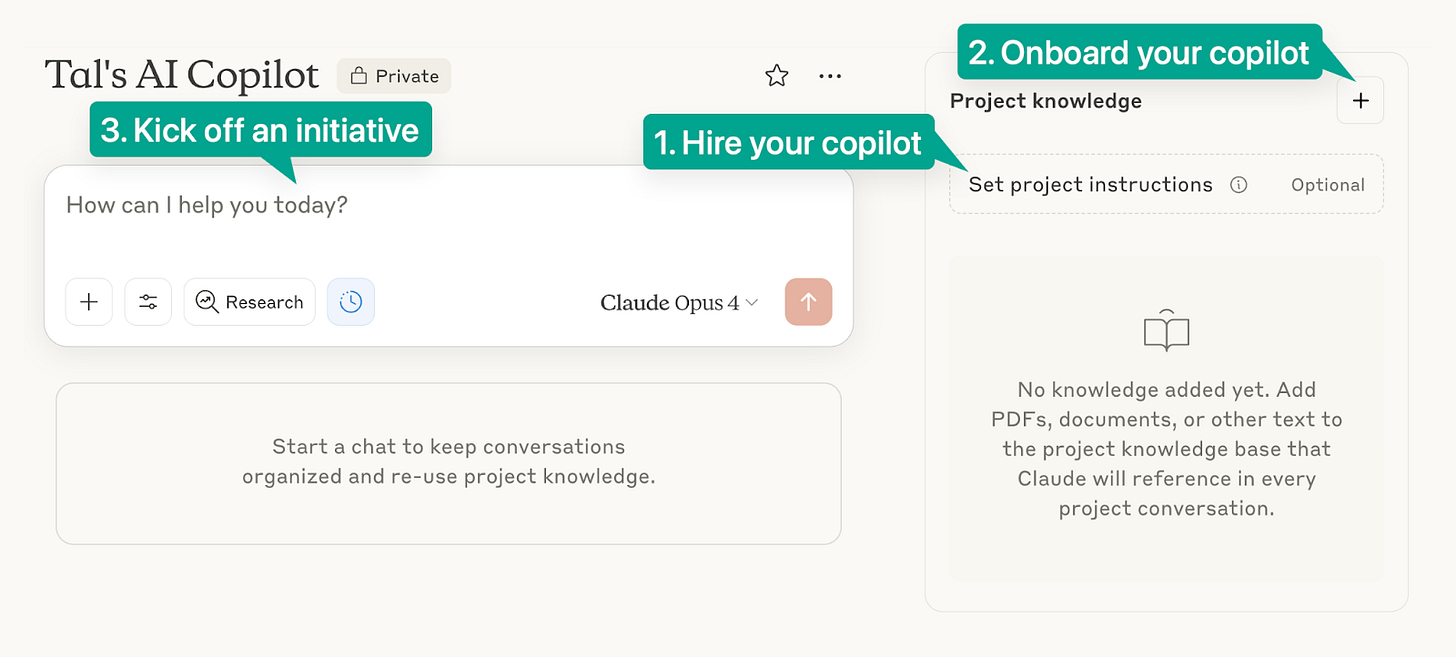

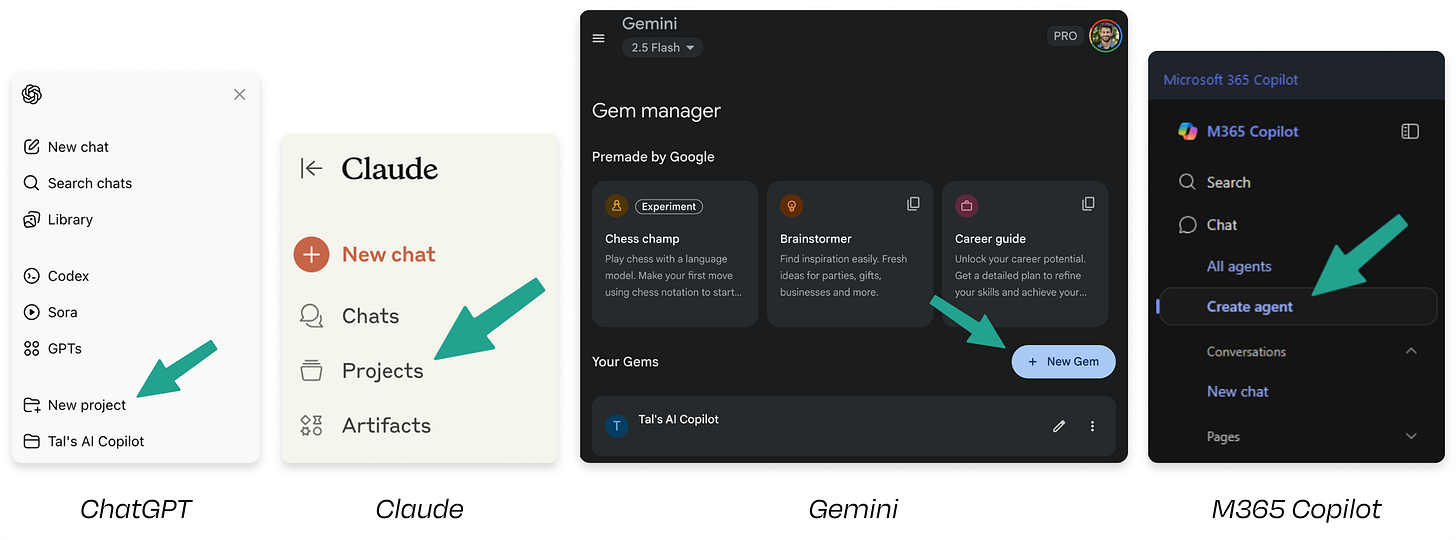

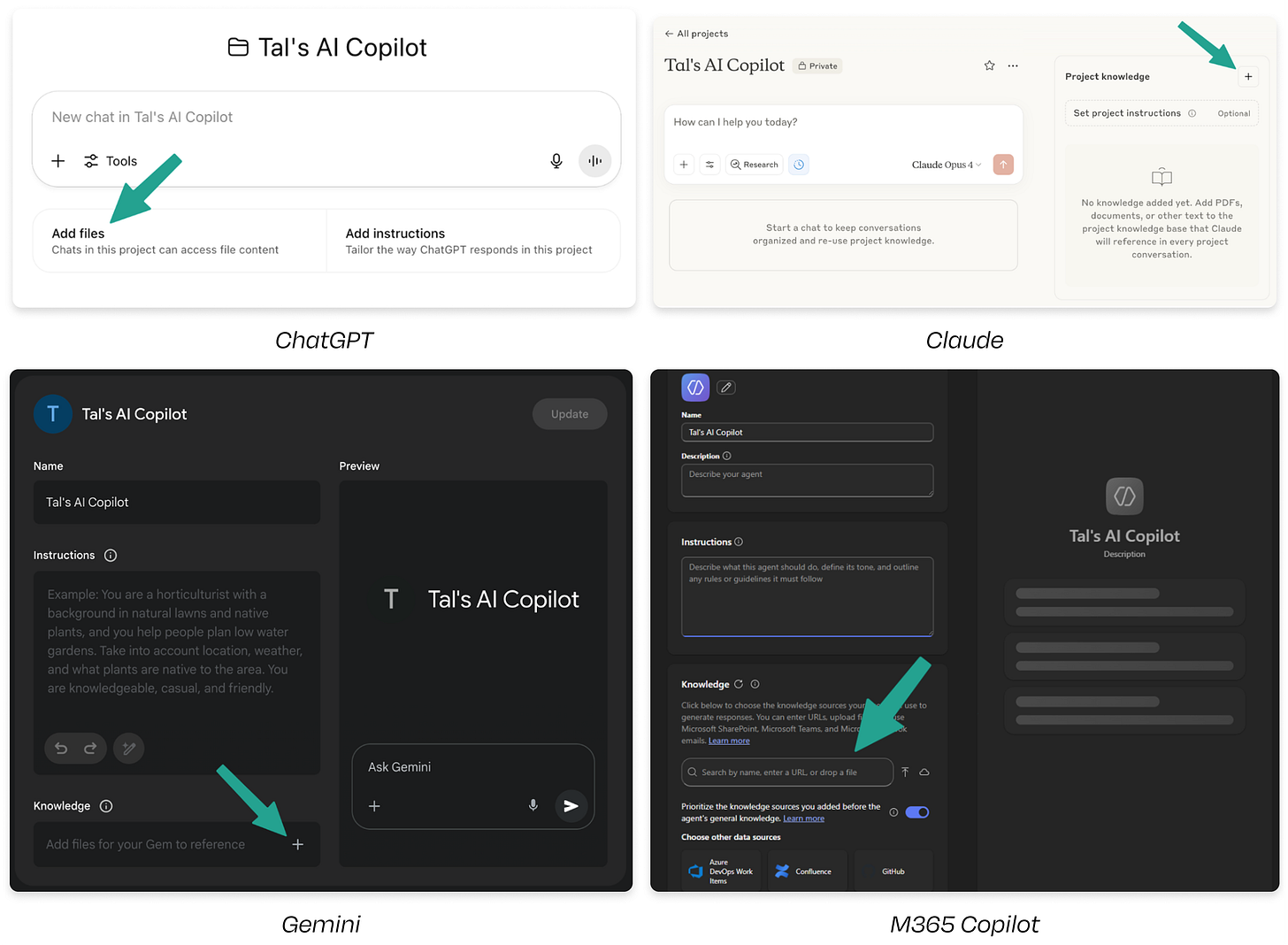

You can build a valuable AI copilot in a number of ways, anything from keeping a long chat thread to creating a geeky Cursor repository. But my preferred method is using the “Projects” feature in my favorite LLM. Projects are available in ChatGPT, Claude, M365 Copilot, and Gemini’s paid and enterprise plans. (The latter two call them “declarative agents” and “Gems,” respectively.)

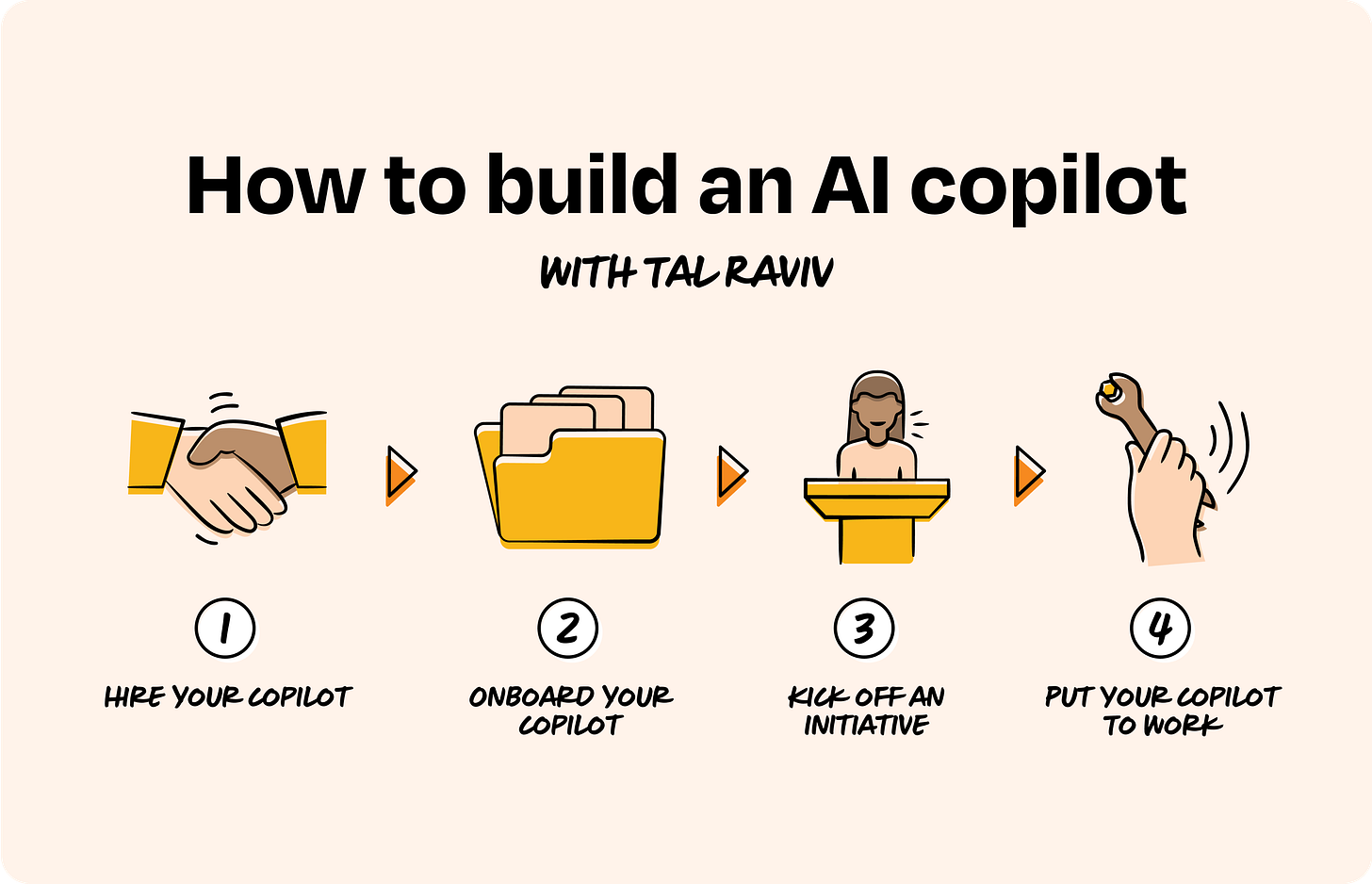

With Projects, building your AI copilot is much like bringing on a new teammate. Each project is made up of three elements: project knowledge, instructions, and chat threads. Together, these elements map onto the four steps of the onboarding process:

Hire your copilot: Use instructions to set your copilot’s role, personality, and behaviors.

Onboard your copilot: Fill out the project knowledge with company and org-level documents that act as shared context across all future conversations with your copilot.

Kick off an initiative: Begin a chat thread for each initiative you’re working on.

Put your copilot to work: Create simple, conversational prompts.

With this framework in mind, open your favorite LLM (upgrade to paid if you haven’t already), navigate to Project/Gem, and let’s get hands-on.

What if your LLM at work doesn’t have projects? No worries, I’ve included a workaround for you at the end of this post.

Step 1: “Hire” your AI copilot

The first step is to create a new project. I’m naming mine “Tal’s AI Copilot.” Don’t worry about giving it a description.

Inside our project, we’ll “hire” our thinking partner by deciding what values and behaviors we want it to have. Like when selecting a colleague or a coach, we’ll choose traits that transcend the initiatives we’re working on, company strategy, or what quarter we’re in.

Projects allow us to do this by defining instructions. Think of these as the “super-prompt” that the LLM applies throughout the conversation, slightly stickier than any prompts you type into a chat conversation. (If you’ve worked on an AI product, you might recognize this as extending the “system prompt.”)

To get you started, copy and paste the following prompt into your project’s instructions. Make sure to fill in the placeholders with what’s relevant to your role:

I am a [role] at [company name], and you are my expert coach and advisor, assisting and proactively coaching me in my role to reach my maximum potential.

I will provide you with detailed information about our company, such as our strategy, target customer, market insights, products, internal stakeholders and team dynamics, past performance reviews, and retrospective results.

In each conversation, I will provide you with information about a particular initiative so you can help me navigate it.

I expect you to: ask me questions when warranted to gain more context, fill in important missing information, and challenge my assumptions. Ask me questions that will let you most effectively coach and assist me in my role.

Encourage me to: [list the values and behaviors that make you successful in your role]

I want you to find the balance of: [traits you want in a thought partner and coach that will be both effective and fun to work with]

There’s nothing sacred about this prompt. It’s a starting point, and you should tweak it over time, according to how you want your copilot to behave. Maybe you want it to be sassier, or more skeptical of what you say. Maybe you want it to be more supportive or perhaps more wildly creative (or all of the above!). The key is to make it yours: treat these instructions as a set of knobs you can play with for the lifetime of your copilot.

Step 2: Onboard your copilot

Congrats! You’ve hired a copilot with a stellar personality and aligned values, and now it’s their first day on the job. Time to onboard them to the realities of your organization, team, and role.

Think: What would you provide to a new teammate in their first week on the job, before they’re assigned any particular initiative or responsibilities? What’s relevant to and informs all initiatives that you work on? We’ll start by uploading these documents to our project knowledge.

Here are some examples of valuable context for your AI copilot:

Your company/product’s landing page (use your browser to “save as PDF”)

Your company strategy deck (export as a PDF)

All of your customer segmentation and research insights

Research on your competitive landscape

Company vision, strategy, quarterly planning docs, etc.

Company processes

Your team’s org chart and roles

Your team’s past retrospectives

Your last performance review or informal manager feedback

Grab two or three that are readily available; don’t overthink it.

If you don’t have a lot of written documentation available, ask your LLM to interview you to make up for any gaps in its knowledge. Imagine this as having coffee with a sharp colleague a few days after they join the team—they’re probably bursting with questions.

Please review what I’ve shared with you and ask me questions to help complete your knowledge.

What important information are you missing (company- and product-level) that you need to help me across all my initiatives, present and future?

Focus more on company, org, and industry level. Focus less on short-term conditions or initiatives (e.g. resources, constraints, or individuals), as those will change over time.

I want you to ask me these questions sequentially (most crucial questions first), so I can answer one at a time.

When you’re ready to wrap up the conversation, ask it to generate a document that you can upload to your project knowledge:

Please create a document with the new information you’ve learned in our conversation. Only include new information learned in this conversation (i.e. that wasn’t already in project knowledge). Don’t outline any outstanding gaps in the document.

Optimize the document for adding to the project knowledge, so it can apply in all our future conversations.

Use exact quotations of my original words for the most salient and important things.

Once the document is ready, download it as a file, and re-upload it to your project knowledge.

Step 3: Kick off an initiative

Now that your new copilot is up to speed on the big picture, it’s time to involve it in a specific initiative.

I recommend keeping each initiative in its own chat thread. That way, your LLM can track the full context with the least amount of effort from you. You’ll use this dedicated chat thread to kick off, brainstorm, and make decisions with your copilot. It’s where you’ll update your copilot throughout the life of the initiative, and where together you’ll navigate the inevitable twists and turns. (Below we’ll cover what to do if you hit thread limits.)

Inside your project, open a new chat thread, and paste in the following prompt:

Now that you have the context on my company and my team, I want to tell you a bit about the initiative that I am working on and give you the specific initiative context.

This is the starting point of what I know, and I’ll be updating over time as more information and insights come in:

[Share what you know about this initiative at the start in any order.]

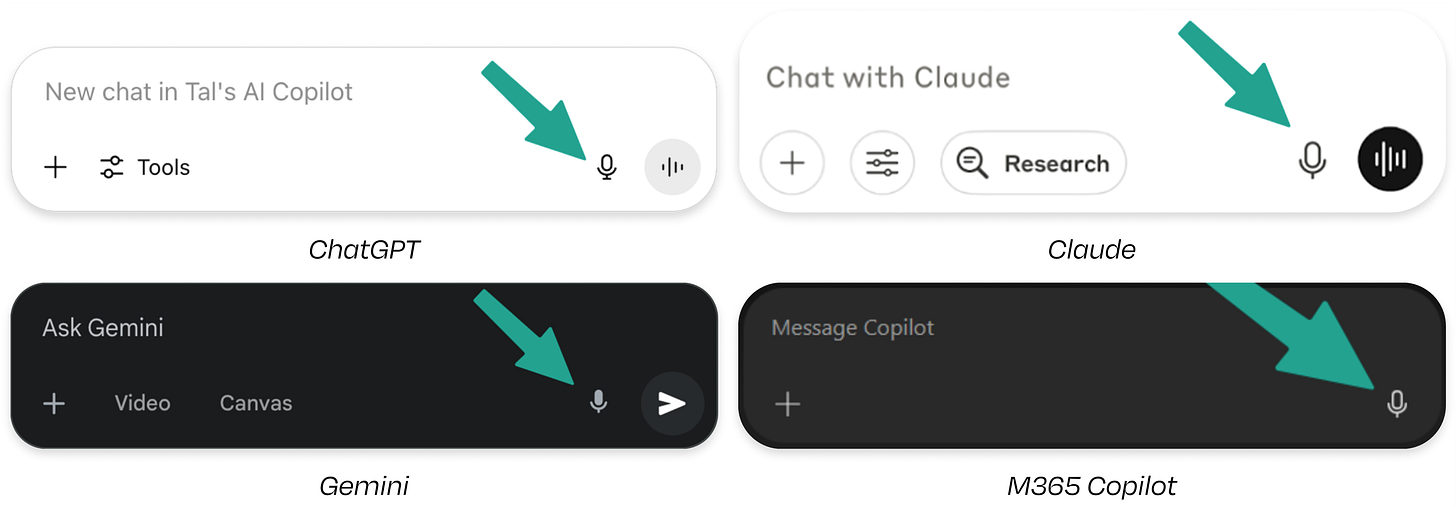

I recommend using the dictation feature (built into ChatGPT, Gemini, and M365 Copilot, or found in Claude’s mobile app) to ramble about what you know to get things started. Let loose about the problem, customer, background, stakeholders, organizational politics—everything that’s on your mind and relevant to this initiative.

Don’t worry about structuring your thoughts in a clean, professional way. Just get it out there in a stream of consciousness. I find that when I start to talk, I’m reminded of additional details I may not have included if I’d structured my thoughts prematurely.

At this point, people usually ask me what model to select. My answer is always: the smartest model available to you at work. We’re not using AI at scale here, so get the best brain you can, even if it’s considered “expensive” or “slow” by engineering standards. (Your only constraints should be where and how your company allows you to upload sensitive information.)

To recap: You “hired” your copilot with instructions, you “onboarded” your copilot with project knowledge, and now you’ve kicked off an initiative in a chat thread. With all this context, it’s time for the copilot to dig in.

Step 4: Put your copilot to work

With your initiative thread kicked off, paste the following into the chat:

What is the single most important thing I should do next?

Take a look at the results. Can you imagine sending that prompt to a blank LLM chat thread (before you had a copilot)? Since you’ve provided ample context and instructions, your copilot returns thought-provoking reflections, spot-on questions, and concrete next steps. In my case, since I told my copilot about my team members and stakeholders, it specifically calls out my colleagues by name (!).

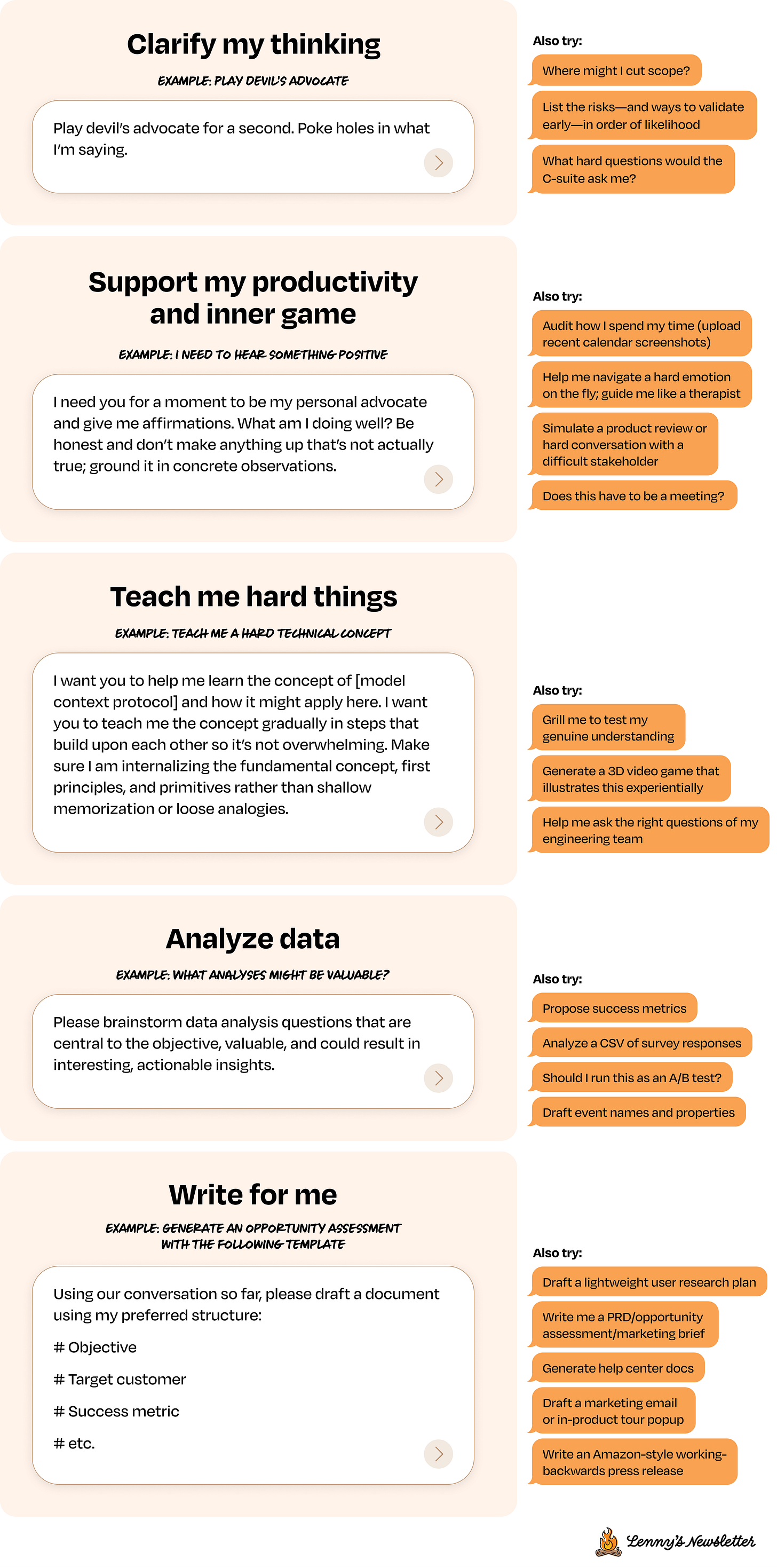

When we approach AI as a thinking partner full of relevant context, it can serve as endless inspiration. Here’s a list of use cases and example prompts to get your imagination going:

Notice how there’s nothing fancy about these prompts. Since the LLM has so much context, conversational one-liners are often all you need. Expect surprising and delightful moments. In the words of Karina Nguyen, an AI researcher who’s worked at both Anthropic and OpenAI, “Models are really good at connecting the dots.”

Other ways to leverage your copilot

Using your copilot to build AI prototypes

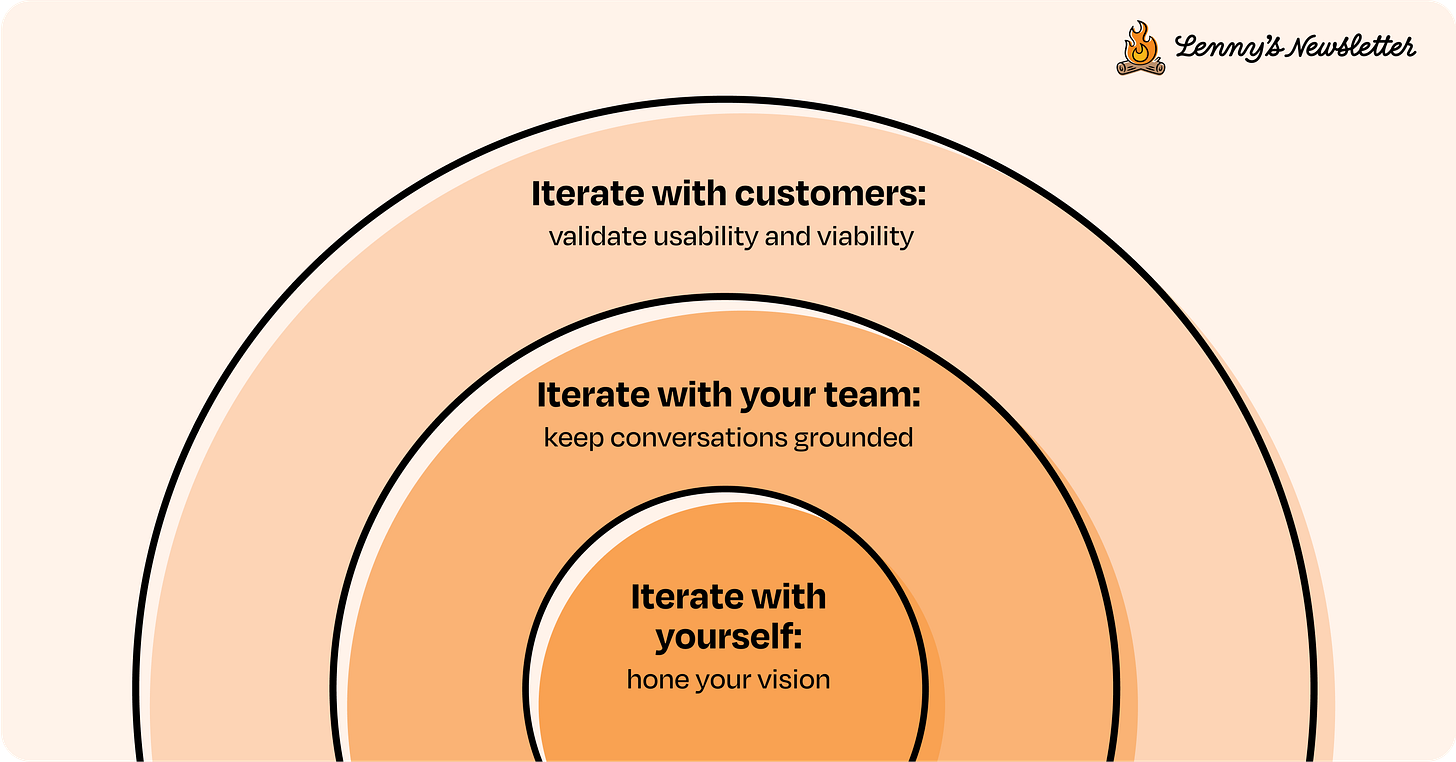

Using AI to prototype shortens the path to making something people want, in three ways:

Iterate with yourself: hone your vision

Iterate with your team: keep conversations grounded

Iterate with customers: validate usability and viability

AI tools for building prototypes (i.e. vibe coding) have gotten so good that the hardest part is now articulating what you want.

Since our copilot has been with us on our entire journey, it becomes much easier to come up with a detailed description of our solution. Once you’ve converged on a direction, ask your copilot to create a prototype directly in the chat (e.g. Claude Artifacts or OpenAI Canvas). Continuing in the same conversation thread, fill out the following prompt:

Help me generate an interactive prototype of this idea.

We are going to build only the interactive, client-side prototype version of this (without deep functionality) to serve as a good internal feedback tool that can also help test the usability with customers.

Keep the scope extremely focused on what we’ve discussed explicitly. “Happy path” only for now.

[Optional: I have attached a screenshot of the current interface. Make sure it looks pixel-perfect and as similar as possible to the original.]

Don’t build it yet; make a plan first. If needed, remind me what other files or images or resources I should provide you to help you do an amazing job.

You can also have it generate a prompt to use in an external text-to-UI tool like v0, Replit, Lovable, Bolt, and so on. Just append the following lines to the prompt above:

Create a separate document with a prompt that I can directly paste into an external AI prototyping tool (all commentary should be outside, so I can just copy and paste the doc as is). This should be a developer-ready specification that can be used to build this interactive prototype.

Assume the tool has no prior context except what you include in the prompt.

Using your copilot to build AI automations

AI automations can take repetitive, energy-draining tasks off our plate. Unfortunately, they’re not magic genies; they shine only under specific conditions. This makes it hard to get started, because the first challenge is thinking of a use case that is both genuinely valuable and a good fit for AI automation platforms.

Did someone mention generating ideas based on personalized context? Sounds like a job for your AI copilot. You can use this prompt in an existing chat thread or start a new one as a throwaway brainstorm. Either way, it’ll base its ideas on your project knowledge:

Based on what you know about me and my organization, please brainstorm five ideas for an AI automation I can build using platforms such as Zapier Agents/Lindy AI/Relay app/Cassidy AI/Gumloop/etc. Limit yourself to what makes sense to build in those platforms.

These should help me, as a product manager, save time on draining yet essential tasks that take me away from more valuable, strategic, and creative uses of my attention and energy.

Ask yourself: What ongoing repetitive work requires some judgment and writing abilities but not my full expertise and intuition? Put another way, if my company assigned me a junior intern, what would I have them do?

Try to phrase each idea in one or two sentences, exactly the way you would in a message asking a junior intern to do something. Additionally, follow up each idea with a brief explanation of why you chose this over other ideas. Pitch me as the product manager in terms of business outcomes and the value of my time.

# IMPORTANT: These should be event-driven AI automations, not batch tasks.

Only suggest event-driven automations that process items one at a time as they arrive, with currently available information. Do NOT suggest batch tasks that process multiple items on a schedule (e.g. “every morning, scan all...” or “weekly, compile...”).

[Why: AI automations shine in one-at-a-time, repetitive tasks. They do best when designed for immediate responses to individual triggers.]

❌ WRONG (Batch Task): “Every morning, scan all new support tickets and summarize them”

✅ RIGHT (Event-Driven): “When a new support ticket arrives, analyze it and alert me if it’s urgent”

Before finalizing your suggestions, verify each one:

- Does it trigger on a specific event? ✓

- Does it process one item at a time? ✓

- Could it run multiple times per day as events occur? ✓

If any answer is “no,” revise it to be event-driven.

The only exception to this is if the end-result value is being delivered on a particular timeline, for example to centralize information and reduce noise (e.g. weekly update, daily brief on upcoming events, or weekly digest of changes). If you believe that a use case falls under this exception, please note your reasoning.

# Examples

Below are examples of use cases where product managers have gotten a lot of value from AI agents. Remember, these are just examples to inspire you to think of use cases that make sense for you.

1. Compile fragmented information that would require a lot of clicks:

“When a new message is posted in the #feature-requests Slack channel, distill the customer request into 2-5 keywords. Search those keywords in recent Slack threads, HubSpot conversations, and Gong snippets, and reply to the thread with links to what you find.”

“Every morning, scan my calendar for customer calls, and instead of searching the web, DM me with recent interactions from this customer in Salesforce, Gong, and Zendesk.” (Example of an exception where the value is the daily delivery timeline to centralize information.)

“Every Monday morning, prepare a competitor activity digest by scanning recent blog posts, App Store updates, and X announcements.” (Example of an exception where the value is the weekly delivery timeline to reduce noise.)

“When a customer churns, post a message in the #churn-lessons channel with recent support interactions, NPS rating and date, and churn survey response.”

2. Boring, Sisyphean tasks with high upside:

“Monitor the pricing pages of 5 competitors for changes.”

“When a bug nears its SLA deadline for the associated customer, ping this dedicated Slack channel and cc each respective customer success representative.”

3. Scanning exhausting amounts of data:

“DM me when a support case is resolved as a ‘product confusion’ reason rather than a technical issue.”

“Ping this channel when you see a feature request that showed up for the first time.”

“When an NPS survey text response is posted in the Slack channel, decide if it’s clearly a technical issue and, if so, create a support ticket in Zendesk.”

4. Drafting updates:

“Every Friday at 10 a.m., write up a summary of progress made across all teams in our project board, across all epics, changes made to scope, and highlighting any timeline changes.” (Example of an exception where the value is the weekly delivery timeline to centralize information.)

Notice the pattern? Good automations start with “When [specific event happens],” not “Every [time period],” unless there’s specific value in triggering on a daily/weekly/etc. cadence.

Scan the resulting use cases and use them as inspiration. Have a conversation! Engage with the results and push back on parts that don’t sit right with you. The goal is finding a use case you’re excited to make happen. With this in hand, turn on deep research mode, and ask your copilot to generate a personalized, step-by-step tutorial on how to construct the automation.
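If the event-driven versus batch distinction in the prompt above feels abstract, here is a minimal Python sketch of the “when a new support ticket arrives” example. It is only an illustration: the `Ticket` class, the `on_new_ticket` handler, and the keyword list are all hypothetical names, and the keyword check is a stand-in for the judgment an AI step would make inside a real automation platform.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-in for the AI judgment step; a real automation
# platform would ask an LLM whether the ticket is urgent.
URGENT_PHRASES = ("outage", "cannot log in", "data loss")


@dataclass
class Ticket:
    ticket_id: int
    text: str


def is_urgent(ticket: Ticket) -> bool:
    """Crude urgency check used only for this illustration."""
    text = ticket.text.lower()
    return any(phrase in text for phrase in URGENT_PHRASES)


def on_new_ticket(ticket: Ticket, alert: Callable[[str], None]) -> bool:
    """Event-driven handler: called once per ticket, at the moment it arrives."""
    if is_urgent(ticket):
        alert(f"Urgent ticket #{ticket.ticket_id}: {ticket.text}")
        return True
    return False


if __name__ == "__main__":
    # Each call represents one trigger firing for one item.
    on_new_ticket(Ticket(101, "Checkout outage for all EU users"), alert=print)
    on_new_ticket(Ticket(102, "How do I change my avatar?"), alert=print)
```

The handler sees exactly one item and only the information available at that moment, which is the shape the prompt asks for. A batch version would instead loop over every ticket from the past day on a schedule.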

Gossiping to your AI copilot

Shit happens. A stakeholder changes their mind, new data arrives, or things turn out to be way higher-effort than planned. One hallway conversation can upend everything.

For your AI copilot to help you navigate, you’ll need to loop it in. I call this habit “gossiping” to AI, because I fill in my copilot the same way I’d lean over and vent to a colleague sitting next to me.

I love using speech-to-text here as well: “You won’t believe what just happened in my conversation with so-and-so. . .” That’s it. No structured format, no formal update process. Just the exasperated sharing that comes naturally.

Even if I don’t have any action items, my AI copilot remembers and references these updates later. Unlike with most coworkers, you can bluntly add:

I don’t want solutions right now; I want you to listen. Confirm with just a “yes.”

Gossip keeps the context fresh and effective.

Pro tip: I personally use my LLM’s native mobile app for dictation on the go. This makes gossiping feel as lightweight as sending a WhatsApp voice message to a friend or coworker.

Habits for getting the most out of your AI copilot

Getting the most out of our copilot depends on us, not the AI. The difference between an okay AI copilot and an exceptional one isn’t the tech—it’s the habits we build around it.

Cultivating an “AI mindset”

Your AI copilot will undoubtedly be delightful, but it most certainly won’t always be right. That brings me to the most important component of using an AI copilot: our mindset.

Working successfully with a thinking partner—human or machine—is about taking the good and disregarding the bad. If our car’s driver assist beeps loudly on a wide-open highway, it’s no harm done. We still keep it activated in case it alerts us to something on the road we didn’t see. Or think of your favorite mentor or confidant: we don’t constantly judge if their advice could “replace our thinking.” Rather, we focus on the parts of what they say that are valuable and inspiring.

Even when they’re wrong, LLMs can be weirdly inspiring and motivating. Often when I disagree with what my AI copilot suggests, it still gets me thinking. As with any outside advice, it spurs me to crystallize what I think I should do instead.

It’s up to us to apply taste and judgment based on hard-earned product intuition. To quote David Lieb (YC partner and founder of Google Photos), “Your gut is the world’s most sophisticated machine learning model ever created.” When working with generative AI, apply your craft and taste to identify what’s valuable.

If you’re frequently getting outputs you’re not happy with, ask yourself, “What context does the AI need to succeed here?” or “Does it have enough guidance to deliver results?” Since we’re using LLMs for their intelligence (rather than for their knowledge), it’s on us to think about what we should provide to set them up for success.

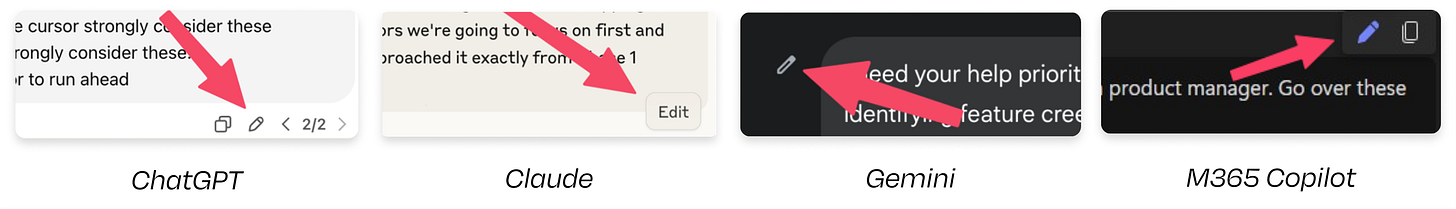

To tweak your inputs, hover your mouse over your last chat message and click the “edit” button (or ✏️ icon) found in all major LLMs. Provide a tiny bit more guidance or background information, and submit again. If you find the same gap happening over and over again, consider adding that information to the project knowledge.

Growing your copilot’s knowledge over time

Our investment in our AI copilot also earns compound interest. Beyond regularly providing context in each initiative thread, we can also make sure our project knowledge continues to grow, instantly benefiting current initiatives as well as those to come.

The end of an initiative is a great opportunity to update the project knowledge. Start by sharing the outcome, good or bad. Include any retrospectives your team ran. Turn on speech-to-text and add your personal reflections. Finally, ask it to output a “new lessons learned” document.

You can guess what’s coming next: download the document, and upload it to the project knowledge. When we add real-world lessons to our project knowledge, we accelerate the opportunities for our copilot to “connect the dots” and surprise us with delightfully wise counsel.

You can think of this as memory, but a transparent, curated memory that’s completely under your control for a professional context.

Note: What if you hit chat size limits?

Different LLMs have different context window limits. If you run into a limit, you can use a prompt to have the LLM summarize the conversation so you can start a new thread with the key context carried over.

To use this prompt, hover your mouse over your last chat message and click the “edit” button (or ✏️ icon). Replace the message with the prompt below and hit enter. Then start a new thread using the output.

This conversation has reached its context limit. Create a document that can serve as the initial context for a fresh blank LLM thread. Your goal is to preserve approximately 90% of the conversation’s value and context while reducing its length by ~90%.

Act as an expert handing off to another expert who will help me, and set them up for maximum success, as close as possible to having been there all along. Tell the new expert what instructions or behaviors to exhibit that I’ve implicitly or explicitly requested of you. Tell a chronological story.

Use your best judgment when it would be particularly valuable to use the original exact words of either the user or AI.

Skip any context that is already found in the project knowledge or in your system instructions, since this will be available automatically to the new thread.

Create a second, separate document with what you chose not to include, and why.
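If you want to sanity-check whether the handoff document landed near the ~90% length reduction the prompt asks for, a rough word count is enough. The sketch below is a hypothetical helper (`length_reduction` is my own name), and it assumes word count is an acceptable proxy for length; LLMs actually measure context in tokens, so treat the result as approximate.

```python
def length_reduction(original: str, handoff: str) -> float:
    """Return the fraction by which the handoff is shorter, counted in words."""
    original_words = len(original.split())
    if original_words == 0:
        raise ValueError("original text is empty")
    return 1 - len(handoff.split()) / original_words


if __name__ == "__main__":
    # Placeholder texts: paste your exported thread and the handoff doc instead.
    thread = "word " * 20000
    handoff = "word " * 2000
    print(f"Reduced by {length_reduction(thread, handoff):.0%}")
```

Length is the easy half to measure. Whether the document preserved most of the conversation’s value is still a judgment call, which is why the prompt also asks for a second document listing what was left out.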

Where might copilots evolve next?

It feels like we’re only at the beginning. Every day I use my AI copilot, I bump into moments where I think, “I wish it could just. . .” From what I hear from my workshop participants, I’m not alone.

Today’s friction points reveal where the real opportunities lie for both founders and incumbents alike, and many startups today are gunning for these opportunities from different angles. Here’s my wish list for what comes next:

Make it easier to update project knowledge

Today, I’m the human API between my copilot and everything else. I manually recount what happened in conversations, export strategy decks as PDFs, and update my project knowledge when I remember to. It works, with a lot of work.

Imagine if your copilot could pull directly from new department templates, project management, or team messaging, dynamically updating as your world changes. I wish it could have all this information without getting confused, overwhelmed, or over-indexing on irrelevant details. I want information to flow the other way as well: what if thinking, planning, and scrapbooking could happen in the same place?

But what really excites me is transparent, editable knowledge that feels like a living document. Imagine today’s consumer “memory” features, but with professional-grade control.

I’m inspired by how Cursor works for coding: imagine selective context, where you choose which documents and initiatives your copilot considers for each conversation. Sometimes you want one feature initiative to take another into account; sometimes you don’t.

I want to benefit from being on a team

What if every teammate joining you had a copilot waiting for them that was 80% ready to rock? Instead of starting from scratch, picture shared knowledge layers curated by leadership. You’d still have your personal context, but now it sits on top of your team’s collective intelligence.

New PMs would start with company-specific templates, relevant frameworks, and real lessons learned. Over time, our copilots would become smarter not just from our individual experiences but from our entire team’s wins and failures.

Push me

Even with the best-constructed copilot, there’s that moment of writer’s block. What should I update it on? What question do I even ask? Building the habit is harder than building the tool.

I would love for AI to push me like a sharp chief of staff that doesn’t wait to be asked. Imagine checking in based on your calendar: “Hey, you have that stakeholder review tomorrow. Want to role-play scenarios?” Or pushing me to focus: “This would be a really good time to schedule user research and get input from the sales team.”

The opportunity isn’t in making copilots smarter, but rather in making them more connected, more collaborative, and more proactive. The future of AI at work may be more about asking better questions of us than offering better answers.

Context changes everything

Using AI at work shouldn’t mean sifting through generic responses or conjuring perfect prompts. The most fruitful way to think about using LLMs is as a collaboration with an inspirational colleague who knows your company strategy, remembers that conversation with your manager from last Tuesday, and can challenge your assumptions when everyone else has gone home.

When we give AI the context it needs—and treat it like a partner sitting next to us rather than a magic oracle—we don’t lose our edge; we sharpen it.

Thank you to Alex Furmansky for being an amazing (human) thinking partner for these ideas. And thank you to Colin Matthews for lending his deep expertise on AI prototyping.

Appendix: What if my LLM doesn’t have “projects”?

While projects are supported by the major LLM providers on all paid plans, many tech workers (especially in Big Tech) don’t have access to projects as part of the internal tools they’re required to use to access LLMs.

If this is you, don’t worry. Projects are not a fundamental LLM capability; they are a UX convenience.

The simplest solution is to do things manually. Assemble your instructions and project knowledge in one long text file. Each time you start a new chat thread, start by pasting in the entire file. This is a decent approximation of how projects work behind the scenes (and way better than working without context). It’s not elegant, but hopefully you won’t have to do this very frequently (more on chat threads later).
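If you would rather not assemble that file by hand each time, here is a minimal sketch of one way to do it in Python. The folder layout (`instructions.txt` plus a `knowledge/` folder of `.txt` and `.md` files), the function names, and the section markers are all my own assumptions for illustration, not something any LLM provider requires. It only stitches plain text together; PDFs and slide decks would need to be exported to text first.

```python
from pathlib import Path


def build_context(instructions: str, knowledge: dict[str, str]) -> str:
    """Join instructions and named knowledge documents into one pasteable block."""
    # The "=== ... ===" markers are an arbitrary convention chosen for this sketch.
    sections = [f"=== INSTRUCTIONS ===\n{instructions.strip()}"]
    for title, body in knowledge.items():
        sections.append(f"=== KNOWLEDGE: {title} ===\n{body.strip()}")
    return "\n\n".join(sections) + "\n"


def build_context_from_folder(folder: Path) -> str:
    """Read instructions.txt plus every .txt/.md file in knowledge/.

    The layout is hypothetical: rename the files and folders to match your own.
    """
    instructions = (folder / "instructions.txt").read_text(encoding="utf-8")
    knowledge = {
        path.name: path.read_text(encoding="utf-8")
        for path in sorted((folder / "knowledge").iterdir())
        if path.suffix in {".txt", ".md"}  # skip anything that is not plain text
    }
    return build_context(instructions, knowledge)


# Small in-memory demo: print the block you would paste at the top of a new chat.
print(build_context(
    "Act as my product thinking partner. Challenge my assumptions.",
    {"strategy.md": "Our focus this quarter is retention."},
))
```

To use it with real files, you could call `build_context_from_folder(Path("copilot"))` (the folder name is an assumption) and paste the returned text at the top of each new chat thread. Keeping every document under its own labeled section makes it easier to update one piece of project knowledge without touching the rest.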

For those who want a more adventurous solution: A few Big Tech employees have realized that while they can’t access projects, they do have access to AI-powered software development environments, like Cursor. Those environments contain all the same ingredients as the projects feature (knowledge, instructions, and chat threads), so these employees have repurposed them as thinking partners (using them with plain English instead of code).

(If the geeky approach sounds appealing, I’m hosting a free lightning lesson featuring live hands-on demos of how people are doing this.)

Thanks, Tal!

Have a fulfilling and productive week 🙏

Sincerely,

Lenny 👋

This feels like a useful read for somebody who's never used projects before in their AI tool of choice, but I think it comes up well short of helping you actually build a personal AI copilot. I say this mainly because projects have serious constraints in terms of the amount of knowledge you can actually upload to them, and the amount of knowledge required to be somebody's personal copilot seems like it has to be significant.

For example, I wanted to create a project in ChatGPT to house all of the images, articles, etc. that I came across showing interior design or architecture that I found beautiful. My hope was that by doing this for multiple years, I could develop a very clear perspective on what I value most when it comes to design, and use that to inform any future theoretical home building or renovation projects I would do. However, in actuality, my project could only hold 20 images, and that kind of killed the dream for me.

How am I supposed to create something that understands me intimately when I have such a hard limit in place?

I got 10 startup ideas reading through this article! Thank you!